
An unsettling story is playing out in tech. It touches the boundaries of privacy and personal freedom: a bonsai version of China’s plan to assign, by 2021, a grade to all 1.3 billion citizens based on their “social behavior.” More and more AI companies are selling recruitment technology, both to large employers and to individuals (to choose a babysitter, for example), that assesses candidates not only on their online history but also on an analysis of their voice tone and facial movements to detect “bad attitudes.” Algorithmic bias plus our past is thus becoming our future. How will this affect our behavior? By creating pressure to conform, for a start…

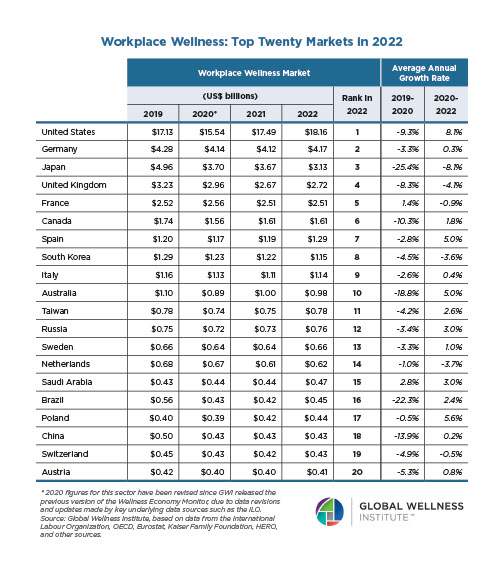

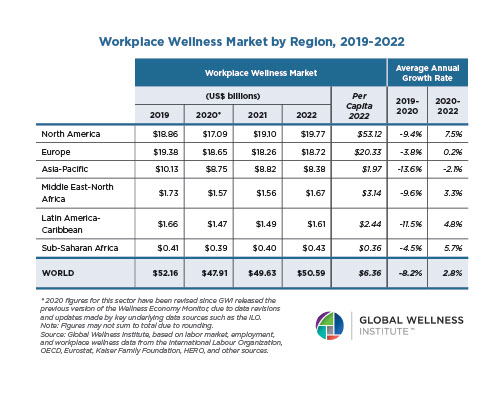

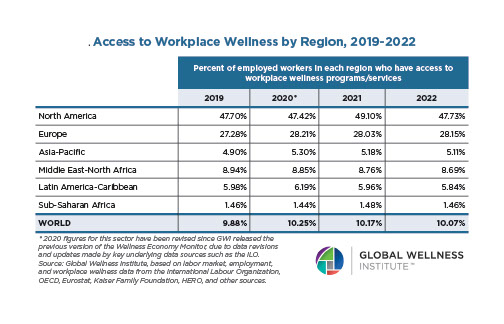

There is an obvious wellness twist to this story. We are, on the whole, increasingly concerned with our own wellness and willing to measure many aspects of it (how much we exercise, how we sleep, whether we meditate, what we eat, etc.). But increasingly this is becoming our employers’ preoccupation too (in the US, corporate wellness is now a $7 billion a year industry). Wellness perks and corporate wellness programs are thus becoming widespread and mainstream, but they raise a fundamental issue about privacy. The concern is that the data will ultimately be shared with insurance companies, which cannot discriminate on the basis of prior health conditions but can use the data to incentivize wellness behavior that brings their costs down.

How does this play out? Take the example of United Healthcare (a US insurance company). It “offers” its customers up to $4 a day in credits if they meet three daily goals monitored by a wearable. These are (1) frequency, meaning 6 sets of 500 steps, each walked in less than 7 minutes and spaced at least an hour apart; (2) intensity, meaning 3,000 steps in a single 30-minute period; and (3) tenacity, meaning 10,000 steps a day. Those who adhere to the program may end up sleepwalking into a data-driven panopticon! The risk for individuals is that the abundance of data, combined with AI and machine learning, will easily blur the line between people’s past and future conditions.
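To make concrete how mechanically a wearable could score a day against these three goals, here is a minimal Python sketch. It is an illustration only, not the insurer's actual logic. The input format (one step count per minute of the day), the function names, and the reading of "less than 7 minutes" as a window of 6 whole minutes are all assumptions. The article does not say how the $4 is split across goals, so the sketch reports only which goals are met.

```python
from typing import Dict, List


def window_sums(steps: List[int], width: int) -> List[int]:
    """Total steps in every run of `width` consecutive minutes."""
    if len(steps) < width:
        return []
    total = sum(steps[:width])
    sums = [total]
    for i in range(width, len(steps)):
        total += steps[i] - steps[i - width]  # slide the window by one minute
        sums.append(total)
    return sums


def count_spaced_sets(steps: List[int], set_steps: int = 500,
                      set_window: int = 6, gap: int = 60) -> int:
    """Count qualifying sets, taking the earliest one each time.

    Assumption: `gap` is measured from the end of one set's window
    to the start of the next.
    """
    sums = window_sums(steps, set_window)
    count = 0
    i = 0
    while i < len(sums):
        if sums[i] >= set_steps:
            count += 1
            i += set_window + gap  # skip past this set plus the mandatory gap
        else:
            i += 1
    return count


def goals_met(steps: List[int]) -> Dict[str, bool]:
    """Evaluate one day of per-minute step counts against the three goals."""
    return {
        "frequency": count_spaced_sets(steps) >= 6,
        "intensity": max(window_sums(steps, 30), default=0) >= 3000,
        "tenacity": sum(steps) >= 10000,
    }


if __name__ == "__main__":
    day = [0] * (24 * 60)          # hypothetical day, all idle
    for hour in (8, 10, 12, 14, 16, 18):
        for minute in range(6):    # six brisk six-minute walks
            day[hour * 60 + minute] = 100
    print(goals_met(day))
```

A few dozen lines are enough to turn a day of someone's movement into three pass/fail verdicts, which is precisely why this kind of monitoring scales so easily.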

Apart from privacy concerns, what are some of the implications? Here is an important one: much sooner than we realize, wellness incentives offered to employees may discriminate against those who are “unwell,” or not as “well” as their employers would like. In short, that means those who are poorer and/or less educated (the correlation between higher socio-economic status and higher subjective wellbeing is well established in the academic literature). The wellness industry must be at the forefront of thinking about these issues and addressing them in an inclusive and fair manner. It’s a space to watch closely.